Llamar.ai: A deep dive into the (in)feasibility of RAG with LLMs

By Christian Prokopp on 2023-09-27

Over four months, I created a working retrieval-augmented generation (RAG) product prototype for a sizeable potential customer using a large language model (LLM). It became a ChatGPT-like expert agent with deep, up-to-date domain knowledge and conversational skills. But I am shutting it down instead of rolling it out. Here is why, how I got there and what it means for the future.

From Skepticism to Revelation

Last year, I worked on automated, large-scale data mining in my startup. A lull earlier in the year led me to explore OpenAI. I was sceptical of ChatGPT and LLMs. Davinci was okay but not mind-blowing. ChatGPT-3.5 was enticing, but I could not see the benefit initially, especially for the hyped coding support. That changed as I worked more with it, became better at prompting, and started using it for more straightforward problems in languages and tech in which I had little experience. GPT-4, released in March 2023, was another leap. Suddenly, with the right skills (and when the service was up), you could use it in daily work. I used it to teach myself new skills and to help with tedious parts of my workday. For example, it helped me build my first AI browser extension for personal use.

In late April, I had the idea of combining an autonomous AI with a knowledge management and discovery engine, a combination of Auto-GPT and RAG. What if customers and employees could search across many sources of information like documents, forums, blogs, documentation, web search, etc., and get a tailor-made answer with the AI autonomously choosing information and steps to answer? The feedback I got from friends in various industries on the product idea was thoroughly positive. But could it be done? I decided to explore the idea.

Inception

I dove into options like open vs. commercial LLMs, building from scratch, fine-tuning, or using in-context learning. For the next two weeks, on the side, I explored options like early-day local open LLMs or tuning ChatGPT. Ultimately, I decided that in-context learning was the most promising approach since the rest was too complex, expensive, and immature. In a nutshell, in-context learning consists of querying an index of corpora for relevant text snippets and sending them along with the user query to the LLM. It can then use the information in the context and its general knowledge to answer the question. It is a straightforward and effective method of adding current and in-depth information to an LLM.
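To make the idea concrete, here is a minimal sketch of the in-context learning loop in Python. It assumes an in-memory list of snippets and, purely for illustration, swaps the usual embedding-based vector search for naive word overlap. `Snippet`, `retrieve` and `build_prompt` are hypothetical names, and the resulting prompt would be sent to whichever LLM API you use.

```python
import re
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # where the text came from, e.g. a URL or page title
    text: str


def tokenize(text: str) -> set[str]:
    """Lower-cased word set: a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, index: list[Snippet], k: int = 3) -> list[Snippet]:
    """Return up to k snippets sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(s.text)), s) for s in index]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated snippets
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]


def build_prompt(query: str, snippets: list[Snippet]) -> str:
    """Combine instructions, numbered snippets and the user question into one prompt."""
    context = "\n".join(
        f"[{i}] ({s.source}) {s.text}" for i, s in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using the context below and your general knowledge. "
        "Cite the snippets you use by their [number].\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


index = [
    Snippet("docs/auth", "Single sign-on is configured in the admin console."),
    Snippet("blog/roadmap", "Our roadmap for the next release."),
    Snippet("forum/123", "How do I reset my admin password?"),
]
question = "How is single sign-on configured?"
prompt = build_prompt(question, retrieve(question, index))
print(prompt)
```

In a real system `retrieve` would query a vector index; numbering the snippets gives the model something concrete to cite when it names its sources.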

I had a working prototype in May using LangChain, LlamaIndex and ChatGPT running in-context learning. I used a software company's openly available documentation as the corpus, hoping to spark their interest. I spent a lot of time researching first and then a lot of time wrestling with LangChain. The results were encouraging and exciting. The company whose documentation I had used, and which I had contacted, was interested in seeing more and willing to give feedback.

Pivot and Transition Challenges

I needed to make changes first. LangChain was great for prototype examples. However, more elaborate use cases suffered from unnecessary complexity, and it overlaps with LlamaIndex in functionality. I did not want to reinvent the wheel, but it became too arduous. I decided to replace LangChain and most of LlamaIndex and build a custom version. The basic functionality was not that hard to replace. Llamar.ai was born.

I planned for Llamar to evolve into a SaaS platform in the medium term. That meant striking a delicate balance between building an MVP swiftly and economically and avoiding a complete rebuild if it succeeded. Most startups have been there.

Furthermore, I needed to replicate the broader corpus of the company I targeted to explore the full capabilities and potential issues. I scraped and indexed tens of thousands of forum posts, hundreds of blog posts and multiple versions of the software documentation, and added a web search. I call these capabilities plugins. Depending on the user query, Llamar could autonomously choose between plugins using ReAct (Synergizing Reasoning and Acting in Language Models) behaviour. One of the more complex features I built, the ability to consider and select a plugin, was superseded by OpenAI's "Function Calling". It was a fast-moving space.
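A ReAct-style plugin loop can be sketched roughly as follows, assuming the model is prompted to reply either with a line such as `Action: docs[query]` or with `Final Answer: ...`. The plugins, the regular expression and the `llm` callable are hypothetical stand-ins, not Llamar's actual implementation.

```python
import re
from typing import Callable, Optional


# Hypothetical plugins; real ones would query a vector index or a web search API.
def search_docs(query: str) -> str:
    return f"docs results for '{query}'"


def search_forum(query: str) -> str:
    return f"forum results for '{query}'"


PLUGINS: dict[str, Callable[[str], str]] = {"docs": search_docs, "forum": search_forum}

# Matches a line such as "Action: docs[single sign-on]".
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def run_action(llm_output: str) -> Optional[str]:
    """Execute the plugin named in an Action line and return an Observation line."""
    match = ACTION_RE.search(llm_output)
    if match is None:
        return None
    name, argument = match.group(1), match.group(2)
    plugin = PLUGINS.get(name)
    if plugin is None:
        return f"Observation: unknown plugin '{name}', choose from {sorted(PLUGINS)}"
    return f"Observation: {plugin(argument)}"


def react_loop(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model reasoning and plugin calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(transcript)
        transcript += output + "\n"
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        observation = run_action(output)
        if observation is None:  # neither an action nor a final answer
            return output.strip()
        transcript += observation + "\n"
    return "No answer within the step limit."
```

OpenAI's Function Calling removes the need for this kind of text parsing: the model returns the chosen function name and its arguments as structured JSON instead of free text.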

Technical Challenges

A core challenge for RAG is information retrieval, i.e., finding relevant snippets in the corpus. First, you have to translate everything into parsable natural-language text. Second, you have to slice it in a usable fashion. The context size imposes hard technical limits on how large the slices can be, and smaller slices come with their own pros and cons. Accuracy, completeness and comprehensibility for the LLM all play prominent roles. Moreover, mixing structured and unstructured information can be highly beneficial if available. For example, if you have different product versions, can you identify and utilise them to avoid confusing your LLM and the user with answers that mix things up? How about metadata? How does relevancy change with the age of a piece of information, depending on the question? Anyone working in information retrieval will have flashbacks.
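As an illustration of the slicing step, the sketch below splits a document into overlapping word windows and copies metadata such as the product version onto each chunk, so retrieval can later filter on it. It counts words rather than model tokens for simplicity, and the default window and overlap sizes are arbitrary assumptions, not tuned values.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_words(text: str, metadata: dict, size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # Stop once the previous window already reached the end of the text.
    for start in range(0, max(len(words) - overlap, 1), step):
        window = words[start:start + size]
        # Copy the document metadata onto every chunk and record its position.
        chunks.append(Chunk(" ".join(window), {**metadata, "start_word": start}))
    return chunks


def filter_by_version(chunks: list[Chunk], version: str) -> list[Chunk]:
    """Keep chunks for the requested version plus chunks with no version tag."""
    return [c for c in chunks if c.metadata.get("version") in (None, version)]
```

The overlap keeps a sentence that straddles a boundary visible in at least one chunk, at the cost of some duplicated text in the index.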

The most significant problem, though, is context window limits. GPT3.5, the default version for most because of its speed and affordability, had a 4k-token context window, equivalent to roughly 3k words. That is not much, considering that you have to instruct the model with the expected behaviour, give it as many text snippets with context as possible, and leave space for the question, some overhead and the response, which could be lengthy. Worse, if you want to converse, i.e. respond to an answer with follow-up questions, you must reserve context for the previous exchanges.
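The squeeze is easiest to see as arithmetic. This hypothetical helper subtracts every fixed reservation from the window to find what is left for retrieved snippets. The example token counts are invented for illustration; real counts would come from the model's tokenizer, and 4,096 tokens is the size of the original "4k" GPT3.5 window.

```python
def snippet_budget(context_window: int, system_prompt: int, question: int,
                   history: int, response_reserve: int, overhead: int = 50) -> int:
    """Tokens left for retrieved snippets once every other part of the call is reserved."""
    reserved = system_prompt + question + history + response_reserve + overhead
    return max(context_window - reserved, 0)


# Hypothetical token counts for a 4k-window model.
budget = snippet_budget(4096, system_prompt=300, question=50, history=600, response_reserve=800)
snippets_that_fit = budget // 250  # assuming snippets of roughly 250 tokens each
print(budget, snippets_that_fit)
```

With these made-up figures, 2,296 tokens remain, enough for only about nine 250-token snippets, and every follow-up question grows `history` and shrinks that number further.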

LLMs are stateless, i.e. each interaction starts from scratch. A conversation or follow-up is simulated by sending the previous history along with your latest query in each call; the model itself has no knowledge of prior interactions. I experimented with rolling summarisation and intelligently dropping the least relevant information from the context, which led to good results, with enough 'memory' to work for multiple follow-ups and conversational interactions.
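The "dropping the least relevant information" idea might be sketched like this: the history is trimmed to a token budget by discarding the older turns that share the fewest words with the new query, always keeping the latest turn. Word overlap is an assumed stand-in for a proper relevance measure, the tokens-per-word estimate comes from the 4k-tokens-to-3k-words ratio mentioned earlier, and the rolling summarisation half (which needs an extra LLM call) is left out.

```python
import math
import re


def estimate_tokens(text: str) -> int:
    """Rough token estimate using the ~4k tokens per ~3k words ratio."""
    return math.ceil(len(text.split()) * 4 / 3)


def word_set(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def fit_history(turns: list[str], query: str, budget: int) -> list[str]:
    """Drop the least query-relevant older turns until the history fits the budget.

    The most recent turn is always kept, and surviving turns keep their order.
    """
    if not turns:
        return []
    query_words = word_set(query)
    total = sum(estimate_tokens(t) for t in turns)
    # Candidates exclude the latest turn; least relevant first, oldest first on ties.
    candidates = sorted(
        range(len(turns) - 1),
        key=lambda i: (len(query_words & word_set(turns[i])), i),
    )
    dropped = set()
    for i in candidates:
        if total <= budget:
            break
        dropped.add(i)
        total -= estimate_tokens(turns[i])
    return [t for i, t in enumerate(turns) if i not in dropped]
```

If even the latest turn exceeds the budget, this sketch returns it anyway; a real system would need to truncate or summarise it.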

Later, a 16k version of GPT3.5 was released, costing twice as much per token. So if you quadruple your context from 4k to 16k, you pay eight times as much. Moreover, LLMs with long context windows struggle to maintain attention, as the 'Lost in the Middle' paper demonstrated.
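Spelled out with $p$ as a placeholder for the 4k model's price per token, filling the 16k window means four times the tokens at twice the price:

```latex
\frac{\text{cost}_{16k}}{\text{cost}_{4k}}
  = \frac{16\text{k tokens} \times 2p}{4\text{k tokens} \times p}
  = 4 \times 2 = 8
```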

At the same time, I had to build a website so users could interact with it and deploy it in the cloud in an affordable yet scalable manner. It should be simple and avoid needing a rewrite if it takes off.

Here, ChatGPT was once again profoundly helpful. Web technology had changed since the last time I built a frontend, and I had to learn a new stack from scratch. Of course, the solution was imperfect, but it worked, and I could progress without much delay and evolve it as I went along. The result was a presentable, scalable, containerised, cloud-based web UI and API for Llamar, drawing on a broad corpus covering many years of blog posts, dozens of forum topics and two distinct documentation versions.

Financial Realities

The feedback on Llamar's capabilities was positive overall. It could autonomously pick plugins and combine information from multiple sources according to the query. For example, if you asked about the security features of a product, it might retrieve information from relevant blog posts and documentation pages and answer your specific question in natural language based on that information. Additionally, you could follow up and converse as in a chat with an expert. Simply put, it is a ChatGPT-like agent but with up-to-date and deep domain knowledge.

A few challenges emerged. It needed to reference the sources of what it found in the vector indices, which I quickly added. Harder was the blending of information from different versions. The corpora reach back many years and versions, which are not always clearly indicated or inferable: old blog posts presumed a version, new documentation might reference old documentation, and forum posts span dozens of topics and cross-reference each other even within a topic. Combine that with the ability of LLMs to make anything sound reasonable, and you risk confusing more than informing. Another problem remained context window size. The chance of retrieving enough relevant information to cover a question and give a comprehensive answer depends mainly on context size.

Luckily, there are various ways to counter most issues, e.g. reducing the choice of corpora, defaulting to expected versions, biasing retrieval towards relevant information, leveraging metadata and more. The context size turned out to be the defining issue. The obvious solution was to expand the choice of models to the recently available GPT3.5 with a 16k window and GPT4 with an 8k window. That had the side effect of bringing GPT4's superior quality into the evaluation.
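Biasing retrieval with metadata might look like the following re-ranking sketch: each hit's similarity score is multiplied by a version factor and an exponential recency decay. The `Hit` fields, the penalty factors and the one-year half-life are illustrative assumptions, not values used in Llamar.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Hit:
    source: str
    similarity: float                # e.g. cosine similarity from the vector index
    published: date
    version: Optional[str] = None    # None when no version can be inferred


def rerank(hits: list[Hit], target_version: str, today: date,
           half_life_days: float = 365.0, other_version_factor: float = 0.2,
           untagged_factor: float = 0.7) -> list[Hit]:
    """Order hits by similarity weighted by version match and an exponential age decay."""
    def score(hit: Hit) -> float:
        if hit.version is None:
            version_factor = untagged_factor
        elif hit.version == target_version:
            version_factor = 1.0
        else:
            version_factor = other_version_factor
        age_days = max((today - hit.published).days, 0)
        recency = 0.5 ** (age_days / half_life_days)  # halves every half_life_days
        return hit.similarity * version_factor * recency

    return sorted(hits, key=score, reverse=True)
```

Whether age should matter at all depends on the question, so in practice the half-life might be tuned per query type or disabled for evergreen topics.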

The many changes were significant improvements. Notably, moving to models with larger context windows was essential, with GPT4 having a noticeable edge in quality. Importantly, using the whole GPT3.5-16k window triggered the attention drop-off of LLMs, where they overemphasise the start and end of a context and neglect the middle, which I managed with smarter prompting. These changes were made iteratively over some time. Ultimately, I was reasonably confident that with GPT4 and the improvements, the MVP was ready for further testing with a broader audience and would likely do the job I designed it for well.
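The exact prompting changes are not described here. One commonly used mitigation for the "lost in the middle" effect is to reorder retrieved snippets so that the most relevant ones sit at the start and end of the context and the weakest land in the middle. A minimal sketch, assuming the input is already sorted by relevance:

```python
def reorder_for_long_context(ranked: list) -> list:
    """Place the most relevant items at both ends and the least relevant in the middle.

    `ranked` must be sorted from most to least relevant.
    """
    front, back = [], []
    for position, item in enumerate(ranked):
        # Alternate: 1st, 3rd, 5th... go to the front; 2nd, 4th... to the back.
        (front if position % 2 == 0 else back).append(item)
    return front + back[::-1]
```

For example, `reorder_for_long_context([1, 2, 3, 4, 5])` returns `[1, 3, 5, 4, 2]`: the two best snippets bracket the context and the weakest one sits in the middle.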

Regrettably, only GPT4 was adequate for the use case, and it did not prove viable. Comparing GPT3.5 to GPT4, API calls have a 20x (not a typo) price disparity. The prospective client aims for hundreds of thousands of user queries per month. The difference is USD 5,000 vs. USD 100,000 in monthly OpenAI API bills. So, one model is feasible but inadequate, and the other is adequate but infeasible. The value proposition and cost do not line up.
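The gap is simple multiplication. In the sketch below, the query volume and the per-query GPT3.5 cost are hypothetical values, chosen only so that they reproduce the USD 5,000 figure; the 20x multiplier is the price disparity quoted above.

```python
QUERIES_PER_MONTH = 250_000   # hypothetical: "hundreds of thousands" of queries
GPT35_COST_PER_QUERY = 0.02   # hypothetical USD, chosen to reproduce the USD 5,000 figure
GPT4_PRICE_MULTIPLIER = 20    # the ~20x API price disparity between the two models


def monthly_bill(queries: int, cost_per_query: float) -> float:
    return queries * cost_per_query


gpt35_bill = monthly_bill(QUERIES_PER_MONTH, GPT35_COST_PER_QUERY)
gpt4_bill = monthly_bill(QUERIES_PER_MONTH, GPT35_COST_PER_QUERY * GPT4_PRICE_MULTIPLIER)
# Price drop GPT4 would need to match the GPT3.5 bill.
required_drop = 1 - gpt35_bill / gpt4_bill
print(f"GPT3.5: ${gpt35_bill:,.0f}  GPT4: ${gpt4_bill:,.0f}  drop needed: {required_drop:.0%}")
```

The last value is where a 95% figure comes from: closing a 20x gap requires the larger model's price to fall to a twentieth of its current level, regardless of the query volume assumed.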

Alternatives

Of course, I reviewed options to mitigate the issue. Reducing the amount of text Llamar sends to the API cannot achieve much because it is already optimised. Fewer users or interactions would mean lower costs but also lower price expectations. Building an alternative way of interacting that does not need the API would turn the product into something else. Using open models and services is not viable either: the ones I looked into offered, at best, GPT3.5-level performance (not good enough) at twice the cost.

That leaves betting on the future, i.e. building it and hoping the market drops prices and makes things competitive. I doubt that will happen in a meaningful timeframe. OpenAI struggled with GPT4 as a service for months, and from what we read in the press, they struggle to make it cost-effective. A 95% (not a typo) drop in price seems a long way off. Given how open models are priced and perform, I see no alternatives arriving soon. Maybe Alphabet, Meta or Amazon have a surprise waiting, but the magnitude of change needed makes me doubt that.

Conclusion

Thinking back to earlier this year, no one knew how LLMs (open and closed) would progress in performance and cost. Importantly, I did not know if I could build Llamar and make it work with GPT3.5. Using LLMs in real applications is undoubtedly complex and challenging but also exciting, adding novel capabilities.

What is the future of Llamar? Unless there is a surprise breakthrough, the described use case is infeasible. However, there are alternative routes:

1. Lower frequency, higher value use cases
2. New products with fewer API calls
3. Mini AI apps to solve specific niche problems
4. Serendipity: finding novel uses for the technology and insights
5. Prices could drop and prove me wrong

I have ideas for, and am exploring, options 1 to 3, and I am keeping my eyes and ears open for 4 and 5.

I enjoyed spending the summer building Llamar.ai. I learned a lot, which may lead to other products and opportunities. As a solo entrepreneur, I previously created a globally distributed multi-cloud data mining platform that collected hundreds of millions of products and billions of updates, and now an interactive LLM-based agent that performs generative searches across many corpora. If you are interested in my work or have suggestions, contact me at blog@bolddata.biz.

Christian Prokopp, PhD, is an experienced data and AI advisor and founder who has worked with Cloud Computing, Data and AI for decades, from hands-on engineering in startups to senior executive positions in global corporations. You can contact him at christian@bolddata.biz for inquiries.

Related Posts

Introducing Tax Shrink

2024-03-14

Tax Shrink is a new online tool that helps owner-operators of Limited companies in the UK calculate and visualise the ideal salary-to-dividend rati...

How to Build Training Datasets and Fine-tune ChatGPT

2023-11-29

Large-language models (LLMs) are great generalists, but modifications are required for optimisation or specialist tasks. The easiest choice is Retr...

The Power of Schema Enforcement in Delta Lake

2023-02-15

Prevent errors and inconsistencies with Delta Lake's robust data management technology.

A poem about Data

2022-12-05

Data is the root of all my worries ...

Free Amazon bestsellers datasets (May 8th 2022)

2022-05-10

Get huge, valuable datasets with 4.9 million Amazon bestsellers for free. No payment, registration or credit card is needed.

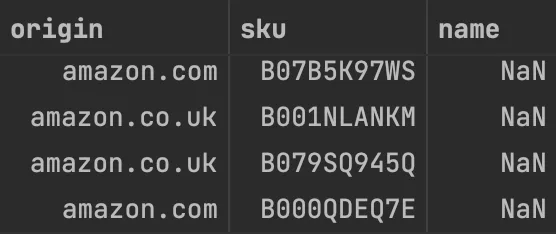

Bad Data: Nameless Amazon bestsellers

2022-05-03

Many Amazon marketplace customers know that its huge product catalogue has data quality issues. However, they might expect its top sellers, which t...